by James Delhauer

Lux Machina began as little more than a group of programmers with a dream to transform the production world by developing and engineering cutting-edge technical solutions for their clients. In time, the company developed into a team of bold industry explorers, nerdy systems architects, and seasoned expert technologists. Today, they are responsible for some of the most impressive and jaw-dropping virtual productions in Hollywood, and at the core of their team are members of Local 695.

Virtual production as it currently exists is a combination of techniques developed for VFX workflows and in-camera SFX techniques that have been developed since the creation of motion pictures. In-camera visual effects (ICVFX) is a subset of virtual production that is the natural evolution of the rear-screen process shot work that Local 695 engineers have done for decades. In its most primitive form, the process shot involves shooting an actor or actors in front of a translucent scrim, which is then hit with a projected image from behind to create the illusion of a three-dimensional background behind the talent. The process was first developed by Harold V. Miller (who was the first in what is currently a three-generation Local 695 family) and the process shot’s first known use was in the 1930 science fiction film Just Imagine. Though crude at the time, the process allowed filmmakers to create a believable illusion in-camera and, with more than ninety years of innovation, this practice has grown much more sophisticated. Lux Machina has pioneered its development, which can be seen in the 2013 film Oblivion. If you are a cinephile and have not seen this film, I cannot recommend it highly enough. Almost a decade later, the visuals are still nothing short of breathtaking.

For the film, Local 695 member and Lux Machina President Zach Alexander designed and operated a 360-degree projection system to create The Skytower, a futuristic science lab located miles above the Earth’s surface. By using a media server system and stitching together images from twenty-one projectors, the team was able to create a fully immersive panoramic set that allowed the cast and crew to feel as though they really were miles high in the sky. This process eliminated the need for the costly and time-consuming visual effects required by a green screen setup, and post-production artists were no longer required to remove the green hue from objects reflecting the color or from the actors’ skin tones. Moreover, the actors were able to fully bring themselves into the film’s world when they could see the scenic backdrop all around them. The benefits of this workflow are too numerous to count.

Following Oblivion’s success, the team at Lux Machina continued to innovate and refine their workflow, bringing their talents to productions like The Titan Games, The Academy Awards, The Emmys, multiple Star Wars films, and this year, the Sony Pictures film Bullet Train.

I had the privilege of sitting down with one of the team’s best and brightest, Local 695 member Jason Davis, who serves as a Media Server Supervisor and whose storied work history includes titles like Solo: A Star Wars Story and Top Gun: Maverick. Jason served as the Media Server Supervisor on Bullet Train, where he was responsible for overseeing the implementation of the LED wall systems that allowed Director David Leitch to achieve his vision for the film.

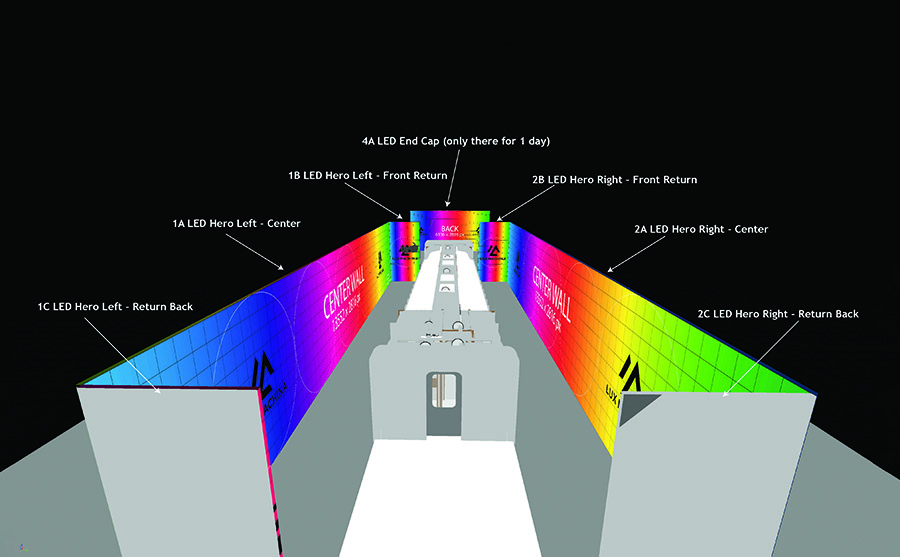

For this production, the team made use of a video wall system built by Sweetwater, with the Lux Machina team taking responsibility for ingesting assets provided by production and playing them back to the wall. On set, Jason operated a series of media servers to ingest and sequence clips in the system. The result was an interactive image that worked in sync with the camera department.

“It’s almost like video editing,” Jason told me when we spoke. “Even though the art team sends over the files, you need to be able to manipulate them in the system.”

Drawing from experience on other productions like Solo, Jason and his team utilized what is called a Disguise Media Server (formerly called the D3 Media Server), which includes an integrated generative content creation platform called Notch. Once assets were converted into Notch’s proprietary NotchLC codec (which required a top-of-the-line Ryzen Threadripper transcode machine in order to process the large and unbelievably dense files), the system was capable of making on-the-fly adjustments to the scale, position, luminosity, and coloration of the picture.

“Thankfully, we’d already worked out most of the kinks in-house and on projects like Solo and Mandalorian, so the workflow was pretty refined coming into this one,” Jason explained.

This confused me. “Was Solo a video wall shoot?” I asked.

“No, we were still using laser projectors on that one,” he clarified. “But we were still using Disguise servers on that one too. So there was a lot of overlap. The mapping is obviously different, but the playback part is pretty similar.”

“What were some of the big challenges on this one?”

“Honestly, the biggest thing was COVID. Communication on set was very different. It was harder to troubleshoot issues, or bring on extra help because no one could come on set without being tested. But as dumb as this sounds, the hardest thing to deal with was the face shields. The way the plastic reflects light meant that there was always a glare right in my eyes and I’d have to crane my neck or look at the screen funny just to see what was right in front of my face. Pretty funny actually. But frustrating and a little time-consuming.”

“That’s funny. How do you feel the film turned out?”

“I think it looks great. We were really pleased with how everything came together.”

“You pick up any cool tricks for the next film?”

“We’re always refining the process and trying to improve it. What we learned on Star Wars helped us make Bullet Train better and what we did on Bullet Train just helped us make Black Adam better.”

When I asked Jason how an interested member might go about getting involved with virtual production, his advice was to get hands-on with the gear as quickly as possible. “This isn’t something you can really learn online. You just need to get in front of the gear and start learning it,” he told me. “As far as the software side of things, start learning Unreal. Learn how to run and manage media servers. Look at Disguise and Notch. These are the tools everyone is using and you’ll have a better chance of standing out if you already have an idea of how those products work.”

I’d like to thank Jason for taking the time to sit down with me and share his experiences working on Bullet Train and in virtual production as a whole. This is a growing area within our Local and professionals like him and Zach Alexander are helping to blaze the trail that 695 members will walk for years to come. To see Jason’s work for yourself, check out Bullet Train on Amazon or home media.