When I started my career in playback, the job consisted of playing back pre-rendered video content onto TV and computer monitors. Fast-forward sixteen years, and our culture has become saturated with display technology. Most people now carry at least one screen, and often several, on their personal devices at all times. As a result, it has become commonplace to take for granted that images and video will just magically appear on demand. The question that greets us on set is often: “We have this new mobile device; can you make it work before it plays tomorrow?” The playback job has always required creativity and flexibility, but the pace of modern technology has pushed things to a new level. To remain viable in this age of rapid growth, we must blend the traditional playback operator’s role with a hefty dose of software engineering.

Having grown up enamored with technology and gaming, I have a natural urge to figure out how things work. This has been a real blessing in my professional life, because it drives me to experiment with and develop for new devices as they appear on my radar. Until recently, we had been making do with pre-made mobile apps and a basic video looping program I developed a few years ago. Devices have now evolved to the point where people enjoy advanced video games on their phones. That made it apparent to me that building on video game engine software would make me more agile and better able to support new devices as they hit the market. Building content across platforms (iOS, Android, Mac, Windows, etc.) has been a major challenge with the tools currently used in computer playback. Adobe Flash works on some mobile devices, but it is limited and cannot take full advantage of a device’s hardware. Adobe Director hasn’t been updated in years, runs only on Mac or PC and receives very limited support from Adobe.

To excel in the fickle gaming community, game engine developers know they must harness every bit of a device’s hardware to give players the best graphics experience possible. To maximize profits, they also need their games to run across platforms. The graphics I build for playback work essentially the same way as a game’s, so it made sense to move my development work into a game engine. The one I ultimately chose was Unity 3D. It lets me display interactive graphics that can be deployed cross-platform and controlled either remotely or by the player/actor in the scene. I’ve gotten a few funny looks on set for triggering playback with what looks like an Xbox gaming controller. At the end of the day, though, the only difference between gaming and this kind of playback is that my software does not keep score … at least not yet!

Rick Whitfield at Warner Bros. Production Sound and Video Services approached me to do the Sony Pictures movie Passengers, and we both felt it would be the right fit for what I had begun to develop in Unity. The production had a large number of embedded mobile devices that needed touch interactivity as well as remote triggering. That called for a toolset that would give us the speed and flexibility to manage and customize the graphic content quickly. Early in preproduction, Chris Kieffer and his playback graphics team at Warner Bros. worked with me on a workflow for creating modular graphic elements that could be colorized and animated in real time on the device. This also gave us a library of content from which we could generate new screens as needed. We also worked closely with Guy Dyas and his Art Department on conceptualizing how the ship’s computer would function, which allowed us to marry the graphics to the functions in a way that made sense. The Art Department further supported this integration by providing us with static design elements, so that we could create a cohesive overall aesthetic.
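One way to picture the modular-element workflow is to author each graphic in neutral grayscale and multiply it by a tint color at runtime, so that one asset can serve many color schemes. The minimal sketch below assumes that multiply-tint approach. It is written in Python for readability, since Unity scripts are normally written in C#. The names `tint_pixel` and `tint_element` are hypothetical and are not part of Unity or the team’s actual tools.

```python
# Hypothetical sketch: recolor a grayscale UI element by multiplying each pixel
# by a tint color. Values are floats in [0.0, 1.0]. A game engine would do this
# on the GPU in a shader, but the per-pixel arithmetic is the same.
from typing import List, Tuple

RGB = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]

def tint_pixel(gray: float, tint: RGB, alpha: float = 1.0) -> RGBA:
    """Multiply one grayscale value by the tint color, keeping the given alpha."""
    r, g, b = tint
    return (gray * r, gray * g, gray * b, alpha)

def tint_element(gray_pixels: List[float], tint: RGB) -> List[RGBA]:
    """Recolor a whole element, stored here as a flat list of grayscale values."""
    return [tint_pixel(v, tint) for v in gray_pixels]

# One grayscale "button" asset reused in two color schemes (illustrative colors).
button = [0.0, 0.5, 1.0]
amber_button = tint_element(button, (1.0, 0.75, 0.0))
cyan_button = tint_element(button, (0.0, 0.8, 1.0))
```

Because the tint is a single runtime parameter, changing a screen’s palette means changing one value instead of re-exporting artwork.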

Throughout the futuristic set, tablets were embedded in the corridor walls. Everything from door panels to elevator buttons to each room’s environmental controls was displayed on touchscreens. Because of the way the set was constructed, many of these tablets were inaccessible once mounted. After the content was loaded, we therefore needed a way to make changes through the device itself. The beauty of using a game engine is that it renders graphics in real time. Whenever color, text, size, position or speed needed to change, we could make the change live and interactively. That avoided the shooting delays we would have caused by ripping devices out of the walls. This flexibility was so attractive to both the production designer and the director that tablets began popping up everywhere!
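Live edits like these work because the engine keeps each element’s state in memory and re-renders it every frame. The sketch below is a hypothetical Python model, not Unity code. It shows one detail that matters on set: animation progress is accumulated frame by frame rather than recomputed as elapsed time times speed. With that approach, changing the speed mid-take alters the rate without making the animation jump. `ScreenElement`, `apply_edit` and the list of editable fields are illustrative assumptions.

```python
# Hypothetical sketch: a screen element whose properties can be edited while
# its animation keeps running. Field names are illustrative, not Unity APIs.
from dataclasses import dataclass

@dataclass
class ScreenElement:
    text: str = "DOOR LOCKED"
    color: tuple = (1.0, 1.0, 1.0)
    scale: float = 1.0
    position: tuple = (0.0, 0.0)
    speed: float = 1.0   # animation cycles per second
    phase: float = 0.0   # progress through the current cycle, in [0, 1)

    def update(self, dt: float) -> None:
        """Advance the animation by one frame lasting dt seconds.

        Phase is accumulated each frame instead of computed as time * speed,
        so a speed change takes effect from the current position with no jump.
        """
        self.phase = (self.phase + dt * self.speed) % 1.0

# Properties an operator may change live from an on-device settings panel.
EDITABLE = {"text", "color", "scale", "position", "speed"}

def apply_edit(element: ScreenElement, field: str, value) -> None:
    """Apply one live edit, rejecting fields that should not be touched."""
    if field not in EDITABLE:
        raise ValueError(f"{field!r} cannot be edited live")
    if field in ("scale", "speed") and value < 0:
        raise ValueError(f"{field} must be non-negative")
    setattr(element, field, value)

# Usage: run half a second at 60 fps, then slow the pulse and change color.
panel = ScreenElement()
for _ in range(30):
    panel.update(1 / 60)
phase_before = panel.phase
apply_edit(panel, "speed", 0.5)
apply_edit(panel, "color", (1.0, 0.2, 0.2))
panel.update(1 / 60)
```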

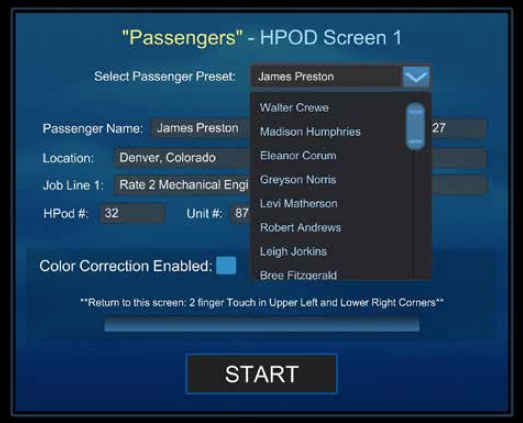

Screenshot of the Unity development software showing a hibernation bay pod screen

When we got to the cafeteria set, we faced the challenge of a tablet floating on glass in front of a Sony 4K TV. The tablet needed to be triggered remotely as well as respond to the actor’s touch. As the storyline goes, Chris Pratt’s character becomes frustrated while dealing with an interactive food-dispensing machine and starts to pound buttons on the tablet. Because the two screens were part of the same machine, his presses needed to be reflected in the corresponding graphics on the larger screen. Traditionally, this would involve remotely puppeteering the second screen to match choreographed button presses. Given how fast he was pressing buttons, it made more sense to leverage the networking capabilities of Unity’s engine and let the tablet control what’s seen on the TV. This eliminated the need for any choreography and allowed Chris to be much more immersed in his character’s predicament. It also kept takes from being interrupted by out-of-sync actions.
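A tablet-drives-TV link can be as simple as the tablet sending one small message per button press and the TV-side program mirroring it. The Python sketch below illustrates that idea. It assumes a JSON message sent over UDP with a sequence number. The message fields, the `TV_ADDRESS` value and the class names are hypothetical, and this is not Unity’s networking API or the exact setup used on the film.

```python
# Hypothetical sketch of a tablet-to-TV link: the tablet sends a JSON message
# for every button press, and the TV side mirrors it. Message format and
# address are assumptions for illustration only.
import json
import socket

TV_ADDRESS = ("192.168.1.50", 9000)  # hypothetical address of the TV's playback machine

def encode_press(button_id: str, seq: int) -> bytes:
    """Serialize one button press. seq lets the receiver drop stale or duplicate packets."""
    return json.dumps({"type": "press", "button": button_id, "seq": seq}).encode("utf-8")

def send_press(sock: socket.socket, button_id: str, seq: int) -> None:
    """Tablet side: fire-and-forget UDP send, so a lost packet never stalls the touch UI."""
    sock.sendto(encode_press(button_id, seq), TV_ADDRESS)

class TVMirror:
    """TV side: keeps the large screen's state in step with the tablet."""

    def __init__(self) -> None:
        self.last_seq = -1
        self.highlighted = None
        self.press_count = 0

    def handle(self, packet: bytes) -> bool:
        """Apply one received packet. Returns False if the packet was ignored."""
        msg = json.loads(packet.decode("utf-8"))
        if msg.get("type") != "press" or msg["seq"] <= self.last_seq:
            return False  # unknown, duplicate or out-of-order message
        self.last_seq = msg["seq"]
        self.highlighted = msg["button"]
        self.press_count += 1
        return True
```

On the TV side, a receive loop would pass each datagram to `TVMirror.handle` and redraw the screen. UDP with sequence numbers is one reasonable design choice here: a late packet is simply dropped instead of delaying the next press, which suits rapid, repeated button pounding.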

From a playback standpoint, one of our most challenging sets was the hibernation bay. With twelve pods containing four tablets each, plus backups, there were more than fifty tablets that had to display vital signs for the characters within the pods. Since extras were constantly shifting between pods, we needed a way to quickly select the corresponding name and information for each passenger. We accomplished this by building a database of cleared names that could be accessed via a drop-down list on each tablet. This way, Rick and I could reconfigure the entire room in just a few minutes. The hero pod that houses Jennifer Lawrence’s character was constructed so that we could not run power cables to its tablets, so we had to run those devices solely on battery power. I therefore built into the software a way to display each device’s battery charge level and Bluetooth connectivity status without interrupting the running program. That let us choose the best time to swap out devices without slowing down production.
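The Python sketch below models two of the ideas in the paragraph above: assigning a cleared name to every tablet in a pod from a drop-down, and a status readout for battery-powered devices. All class and function names, the placeholder passenger names, the tablet position labels and the 20% swap threshold are hypothetical. The sketch does not represent the production’s actual database.

```python
# Hypothetical sketch: a cleared-names list feeding each pod tablet's drop-down,
# plus a battery/Bluetooth readout for devices running without power cables.
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class PodTablet:
    pod: int                          # pod number, 1-12
    position: str                     # which of the pod's four tablets (labels assumed)
    passenger: Optional[str] = None   # cleared name currently displayed
    battery_pct: int = 100
    bluetooth_connected: bool = True

def dropdown_options(cleared: List[str]) -> List[str]:
    """Names offered in each tablet's drop-down, sorted for quick picking."""
    return sorted(cleared)

def assign(tablets: List[PodTablet], pod: int, name: str, cleared: List[str]) -> None:
    """Point every tablet in one pod at the same cleared passenger name."""
    if name not in cleared:
        raise ValueError(f"{name!r} is not on the cleared list")
    for t in tablets:
        if t.pod == pod:
            t.passenger = name

def status_line(t: PodTablet) -> str:
    """Small overlay text showing charge and link state while playback keeps running."""
    link = "BT OK" if t.bluetooth_connected else "BT LOST"
    return f"{t.battery_pct}% {link}"

def needs_swap(t: PodTablet, low_pct: int = 20) -> bool:
    """Flag a device to swap between takes (the threshold is an assumption)."""
    return t.battery_pct <= low_pct or not t.bluetooth_connected

# Usage: twelve pods with four tablets each, then move one extra into pod 5.
cleared = ["Passenger 014", "Passenger 003", "Passenger 027"]
room = [PodTablet(pod=p, position=pos) for p in range(1, 13) for pos in ("A", "B", "C", "D")]
assign(room, 5, "Passenger 027", cleared)
```

Keeping the names in one shared list means a reassignment is a single selection per pod, which is what makes reconfiguring a whole room quick.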

One of the bonuses of working in most 3D game engine environments is having the tools to write custom shaders that colorize or distort the final render output. This makes it possible to change color temperature interactively to match the shot’s lighting. It also allows glitch distortion effects to be added in real time, without pre-rendering or even interrupting the running animation. Many of our larger sets, like the bridge, reactor room and steward’s desk, needed all of their devices and computer displays triggered in sync. Some scenes called for the room to power down and then boot back up, or to switch to a damaged glitch mode, based on the action within the scene. I had been developing a network playback prototype, but the production’s time constraints meant that we ultimately had to trigger the computer displays and mobile devices on separate network groups.
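The two shader effects described above can be sketched per pixel. The Python code below is written for readability. In an engine, the same math would run in a fragment shader, and this is not the shader code used on the film. The warm/cool grade is a crude linear approximation, not a physically based Kelvin conversion. The glitch shifts random scanlines and is seeded by frame number so that it repeats identically from take to take. All function names and default parameters are hypothetical.

```python
# Hypothetical sketch of two post-process effects on an image stored as rows of
# (r, g, b) floats in [0, 1]. A real engine would apply these in a shader.
import random
from typing import List, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]

def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))

def shift_temperature(img: Image, warmth: float, strength: float = 0.2) -> Image:
    """Crude warm/cool grade: warmth > 0 boosts red and cuts blue, warmth < 0 does the reverse.

    A linear approximation, not a physically based color-temperature model.
    """
    r_gain = 1.0 + strength * warmth
    b_gain = 1.0 - strength * warmth
    return [[(clamp01(r * r_gain), g, clamp01(b * b_gain)) for (r, g, b) in row] for row in img]

def glitch_rows(img: Image, frame: int, chance: float = 0.1, max_shift: int = 8) -> Image:
    """Displace random scanlines horizontally, wrapping pixels around the edge.

    Seeding the generator with the frame number makes the glitch repeatable.
    """
    rng = random.Random(frame)
    out = []
    for row in img:
        if row and rng.random() < chance:
            k = rng.randint(-max_shift, max_shift) % len(row)
            row = row[k:] + row[:k]  # rotate the scanline by k pixels
        out.append(list(row))
    return out
```

Both functions leave the source image untouched and return a new one. That mirrors how a post-process shader reads the rendered frame and writes a separate output, so the effects can be switched on and off mid-scene.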

Though I’ve since worked out the kinks in cross-platform network control, this served as a reminder that when working with new and untested technology, things can and will fail you. That is especially true when you’re using development tools that weren’t designed to function as on-set playback tools. Still, technology is only advancing faster. Soon, we will be seeing curved phone displays, flexible/bendable and transparent screens, and all manner of wearable devices. And that’s only in the next few years. What happens beyond that is anyone’s guess.

All that said, you can have all the technology in the world, but without a great team, it doesn’t matter. Having Rick Whitfield as a supervisor, with his wealth of experience and decades of knowledge, was invaluable. He spent years thinking far outside the box to achieve advanced computer graphic effects in an age when actual computers couldn’t create the necessary look. That experience allowed him to break down any issue into its simplest, solvable form. The talented graphics team at Warner Bros. (Chris Kieffer, Coplin LeBleu and Sal Palacios) pulled out all the stops when it came to creating beautiful content for the film. The sheer amount of content they produced, and their willingness to build elements in a way that made real-time graphics possible, bordered on a heroic feat. I consider myself extremely fortunate to have been a part of their team on Passengers.

I am thrilled to be standing on the bleeding edge of technology, merging what I do in playback with new advances like game engines. I’m even more excited to think of the day when this will all be old hat and we’ll be on to something newer and even more exciting.