by Scott D. Smith, CAS

Author’s note: After a brief detour to examine some of the early history pertaining to Local 695, we now continue with our regularly scheduled program. We will return to the history of the Local in future issues.

Introduction

In the previous installment of “When Sound Was Reel,” we examined the proliferation of the “Widescreen Epic,” a format developed by the major studios to counteract the rise of broadcast television in the early 1950s. Although widescreen films would continue to be produced through the late 1960s, studio bean counters were becoming increasingly critical of these films, which typically involved significant costs for 65mm camera negative, processing, sound mixing and magnetic release prints, not to mention the large and expensive casts. As the novelty of a widescreen presentation with stereophonic sound began to wear off, studios were rethinking the costs associated with such productions.

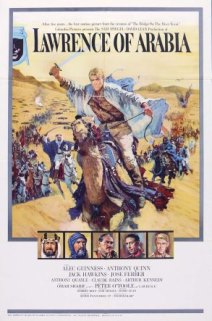

Despite the success of a few 70mm releases, notably Lawrence of Arabia, 2001: A Space Odyssey and Woodstock, by the early 1970s the format had largely run its course. With the development of liquid gate printers, it was now possible to achieve a good blowup from an original 35mm negative. Given the efficiencies of working with standard 35mm camera gear, no studio would consider the cost of shooting in 65mm worth the effort. Even Doctor Zhivago, released by MGM in 1965, was blown up from a 35mm camera negative.

However, the epic films had established a new benchmark in quality, and audiences came to expect something more than a 1.85:1 aspect ratio picture with mono sound, presented with projection equipment originally developed in the 1930s and ’40s. Moreover, by this time, quality home stereo equipment was becoming widely available, and many consumers owned reel-to-reel tape decks that surpassed the fidelity of even the best 35mm optical track. If studios expected to provide a premium entertainment experience that justified the price of a ticket, they would need to improve the overall quality of their films.

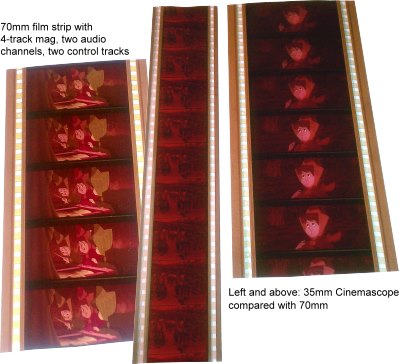

Raising the quality of 35mm prints to match the road show pictures shot in 65mm (and released in 70mm with magnetic soundtracks) was a considerable challenge. While 70mm releases, even those produced from 35mm negatives, continued to be considered the “gold standard” for big-budget releases, by the early 1970s advances in print stocks, laboratory procedures and optics were beginning to shrink the gap between the picture quality of a good 35mm print and that of a 70mm blowup. Sound, however, remained a problem. Although 4-track magnetic CinemaScope prints were still in fairly wide use during the 1960s, there were considerable costs for striping and sounding onto the special “Foxhole perf” stock (with smaller sprocket holes to allow space for the mag tracks). In addition, theater owners were balking at the cost of replacing the 4-track magnetic heads, which wore out quickly (this was before the advent of ferrite heads).

Advances in the quality of 35mm mono optical tracks had been mostly in the area of negative and print stocks. These yielded slight improvements in frequency response and distortion, but the tracks were still a long way from the quality that the average consumer could get from a halfway decent home stereo system of the era. For the most part, significant development in optical tracks had stalled in the 1950s, when most studios were directing their efforts at widescreen processes. Most of the optical recorders still in use by the early ’70s were derived from designs dating back to the 1940s and had seen little innovation, except in their electronics, which had been upgraded to solid state.

Multi-Track Magnetic Recording

While the film industry was wrestling with how to improve sound reproduction in the theater, other innovations were taking place in the music industry. Chief among these was the advent of multi-track analog recording, a process largely attributed to guitarist Les Paul. Since the 1930s, Les Paul had experimented with multi-layered recording techniques, recording multiple instances of his own performances by playing one part and then adding subsequent layers. His first attempts used acetate disks which, predictably, resulted in poor audio quality. Later on, Les Paul worked with Jack Mullin at Ampex, which had been commissioned by Bing Crosby to develop the Ampex 200 recorder. Recognizing the obvious advantages of magnetic tape over acetate disks, Les Paul took an Ampex 200 recorder, added an additional reproduce head ahead of the erase and record heads, and developed the first “sound-on-sound” process. The downside, of course, was that each previous recording was destroyed as a new layer was added. Seeking a better solution, in 1954 Les Paul commissioned Ampex to build the first 8-track one-inch recorder, with a feature called “Sel-Sync®,” which allowed any track to be reproduced through the record head, maintaining perfect sync with newly recorded material without destroying the previous recording. This technique would become the mainstay of multi-channel recording for both music and film well into the 1980s.

In this regard, Les Paul and the engineers at Ampex were true pioneers, developing techniques that would forever change the way that music was recorded. There was, however, one small problem. Noise.

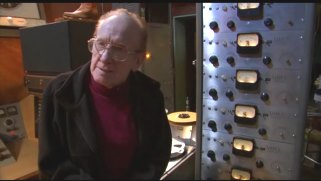

Enter Ray Dolby

About the time that Jack Mullin and his team were improving audio recording, another Ampex engineer, Ray Dolby, a bright fellow from Portland, Oregon, was working on the early stages of video recording. Armed with a BS degree from Stanford University (1957), Dolby soon left Ampex to pursue further studies at Cambridge University in England, having been awarded a Marshall Scholarship and an NSF Fellowship. After a brief stint as a United Nations advisor in India, he returned to England and in 1965 established Dolby Laboratories in London. His first project was a noise reduction system for analog recording, christened “Dolby A.” This multi-band encode/decode process compressed program material into a smaller dynamic range during recording, with a matching expansion during playback.
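To make the encode/decode idea concrete, here is a minimal Python sketch of a generic single-band compander working on signal levels in dB. It is not Dolby A, which splits the signal into several frequency bands and acts mainly on low-level material; the 2:1 ratio, the 0 dB reference level and the function names are all hypothetical, chosen only to show why complementary compression and expansion lower the noise picked up in between.

```python
# Hypothetical static compander, for illustration only (not the Dolby A circuit).
# Levels are in dB relative to an assumed reference level that passes unchanged.

REF_DB = 0.0   # assumed reference level
RATIO = 2.0    # assumed 2:1 compression on record, 1:2 expansion on playback


def encode_db(level_db: float) -> float:
    """Record side: shrink the distance from the reference level by RATIO."""
    return REF_DB + (level_db - REF_DB) / RATIO


def decode_db(level_db: float) -> float:
    """Playback side: the exact inverse of encode_db."""
    return REF_DB + (level_db - REF_DB) * RATIO


def decoder_gain_db(encoded_level_db: float) -> float:
    """Gain the expander applies, which is set by the encoded signal level."""
    return decode_db(encoded_level_db) - encoded_level_db


def snr_db(signal_db: float, noise_db: float) -> tuple[float, float]:
    """Return (S/N without companding, S/N with companding).

    Assumes the recording noise sits well below the encoded signal, so the
    expander's gain is governed by the signal and applies equally to the noise.
    """
    without = signal_db - noise_db
    encoded = encode_db(signal_db)
    gain = decoder_gain_db(encoded)
    noise_after = noise_db + gain
    with_nr = decode_db(encoded) - noise_after
    return without, with_nr


if __name__ == "__main__":
    # A quiet passage at -60 dB recorded on a medium whose noise sits at -50 dB.
    print(snr_db(-60.0, -50.0))  # prints (-10.0, 20.0)
```

With these assumed numbers, the -60 dB passage is recorded at -30 dB, well above the -50 dB noise. On playback the expander applies -30 dB of gain, restoring the signal to -60 dB and pushing the noise down to -80 dB, so the passage goes from 10 dB below the noise to 20 dB above it. A real system must also follow changing program levels over time, which this static sketch ignores.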

The first processor that Dolby designed was a bit of a monster. The Model A301 handled a single channel of processing and took up five units (8.75 inches) of rack space! Needless to say, it didn’t catch on in a big way for multi-channel work, although it made inroads into the classical recording market, especially in the UK. Understanding that the real market would be in multi-channel recording, Dolby quickly followed up with the Model 360/361 processors, which used a single processing card (the Cat 22) and took only one unit of rack space per channel. While this reduced the rack space required for eight or 16 channels of noise reduction, it was still a bit unwieldy.

In 1972, Dolby took the development a bit further, with the release of the Dolby M system, which combined 16 channels of Cat 22 processing cards in a frame only eight rack units high. By utilizing a common power supply and smaller input/output cards, this system provided a much more cost-effective solution to multi-track recording.

Dolby Consumer Products

About two years after the release of the Dolby A301 processor, Henry Kloss (of KLH fame) persuaded Dolby to develop a simplified version of the Dolby A system for consumer use. In response, Dolby developed what is now known as the “Dolby B” system, which has found its way into millions of consumer products over the years. Unlike the Dolby A system, it used a single band of high-frequency companding, aimed at tape hiss, the most conspicuous defect of consumer recorders, and required a minimal number of components.

Having firmly established itself in both the professional and consumer music recording market, Dolby turned to the next challenge: film sound recording.

The “Academy Curve”

As enthusiasm for releasing films in 35mm 4-track and 70mm 6-track magnetic waned, producers and engineers in Hollywood began to search for other ways to improve film sound. During his initial evaluation of film sound recording, Dolby determined that many of the ills associated with mono optical soundtracks were related to their limited high-frequency response (extending to about 12.5 kHz on a good day), as well as the effects of the “Academy Curve,” which had been established in the late 1930s. To understand how this affected film sound, one needs to look at the development of early cinema sound systems, many of which were still in use into the 1970s. Most early cinema sound systems (developed by RCA and Western Electric) had paltry amplification by today’s standards. In the early 1930s, it was not unusual for a 2000-seat house to be powered by a single amplifier of 25 watts or less! Obtaining a reasonable sound pressure level required very high-efficiency speaker systems, which meant that horn-based systems were the order of the day. Although quite efficient, most of these early systems had severely limited HF response. This was OK, though, as it helped to mask the noise from the optical tracks.

However, compensating for the combined effects of high-frequency roll-off and optical track noise meant that high frequencies needed to be boosted during the re-recording process. Typically, this involved some boost during re-recording, with further equalization when the optical negative was struck. While this helped to solve the problems of noise and HF roll-off during reproduction in the theater, it also introduced significant HF distortion, which was already a problem in optical track recording. Excessive sibilance was usually the most glaring artifact.

While new cinema speaker systems introduced in the mid-1930s (most notably the Shearer two-way horn system in 1936) improved on the response of earlier systems, HF response was still limited. This was due primarily to the combined effects of optical reproducer slit loss, high-frequency losses from speakers located behind perforated screens, and amplification that was still anemic by today’s standards.

Engineers of the Academy, working cooperatively with the major studios, put into place a program to standardize sound reproduction in cinemas. Recognizing that many theaters still employed earlier sound systems with limited bandwidth, the Academy adopted a compromise playback curve that would not overly tax these systems: a response, measured at the amplifier output, that rolled off severely from around 7 kHz. This is about the quality of AM broadcast radio. Thus was born the “Academy Curve,” which would remain the standard for about 40 years.

The Academy Curve Gets a Makeover

In 1969, an engineer named Ioan Allen joined Dolby Laboratories and quickly began a systematic examination of the entire cinema reproduction chain. Working with a team of four engineers, Allen examined each step in the process, including production sound recording on location, re-recording, optical recording and theatrical release. Some of what he found was surprising. Although magnetic recording had been introduced to the production and re-recording stages of film sound in the early 1950s, its advantages of superior frequency response and S/N ratio were largely negated by optical soundtracks and theater sound systems. While Allen and Dolby ultimately determined that optical tracks could be improved, changing the standards of the industry overnight was a tall order. Allen and the Dolby engineers decided first to test some theories on the production and re-recording part of the chain, beginning with work on the music for the film Oliver! in 1969.

Following those tests, Dolby A noise reduction was employed for some of the music recording on Ryan’s Daughter, which was to be released in 70mm magnetic. However, Allen and the engineering team at Dolby were frustrated that very few of these improvements actually translated into better sound reproduction in the theater. Seeking to address the limited quality of the optical tracks of the day, Dolby Labs arranged a test using one reel from the film Jane Eyre with Dolby A applied to the optical soundtrack. The results were rather disappointing; the noise reduction did nothing to compensate for the limited HF response and audible distortion.

During the mix of Stanley Kubrick’s film A Clockwork Orange, Allen and Dolby convinced Kubrick and composer Walter Carlos to use the Dolby A system for premixes. However, the film was still released with an Academy mono track. It was during these tests at Elstree Studios that Allen and Dolby determined that the limitations of the Academy Curve were to blame for many of the problems associated with mono optical tracks. Allen found that the measured room response of the Elstree main dubbing stage (using Vitavox speakers, a two-way system comparable to the Altec A4) was down more than 20 dB at 8 kHz! This was in line with earlier findings by others. To compensate, post mixers would severely roll off the low end of most tracks and typically boost the dialog tracks by at least 6 dB in the region from about 3 kHz to 8 kHz. Predictably, this exacerbated the distortion problems of the optical track, which typically had a low-pass filter around 10–12 kHz to control distortion and sibilance. Looking at the chain in its entirety, it was obvious that a huge amount of equalization was taking place at each stage.

Around this time (late 1971), one-third octave equalizers and higher-power amplifiers were becoming available and being used in music recording. Notable among these developments were the Altec Model 9860A one-third octave equalizer and the 8050A real-time analyzer. Along with improvements in crossover design, these advances in room equalization and measurement brought a new level of sophistication to auditorium sound systems and studio monitors alike.

With one-third octave equalization and good measuring tools now available, Allen and the Dolby engineers conducted further tests at Elstree Studios from late 1971 through 1972. First, they positioned three KEF monitors in a near-field arrangement (L/C/R) about six feet in front of the dubbing console. Based on a series of measurements and listening tests, these were determined to be essentially “flat,” requiring no equalization.

They then inserted one-third octave equalizers into the monitor chain of the standard Vitavox behind-the-screen theater monitors and adjusted the equalization for the best subjective match to the near-field KEF monitors. While they were not surprised to find that the low-frequency response and crossover region needed correction, they were rather puzzled that the best subjective match between the near-field monitors and the behind-the-screen system called for a slight high-frequency roll-off in the larger screen system. This was attributed to the psychoacoustic effect of having both picture and sound emanating from a distant source (about 40 feet away, in the case of the Elstree stage), as well as the effects of room reverberation, coupled with HF distortion artifacts. At the end of the day, however, they determined that a flat response for the screen system was not a desirable goal. This was a good thing, as it was virtually impossible to achieve in the real world.

Theater Sound in the Real World

About two years before the work that Allen and the Dolby engineers conducted at Elstree, significant research on sound reproduction in the cinema was published in three papers in the December 1969 issue of the SMPTE Journal. The first was a paper somewhat dryly entitled “Standardized Sound Reproduction in Cinemas and Control Rooms” by Lennart Ljungberg. It was most notable for introducing the “A-chain” and “B-chain” designations for cinema sound systems, with the “A-chain” representing all parts of the reproducer system up to the source switch point (e.g., magnetic sound reproducer, optical reproducer, non-sync sources) and the “B-chain” comprising everything from the main fader to the auditorium system.

The same issue carried two other papers: one from Denmark, “A Report on Listening Characteristics in 25 Danish Cinemas” by Erik Rasmussen, and another from the UK, “The Evaluation and Standardization of the Loudspeaker-Acoustics Link in Motion Picture Theatres” by A.W. Lumkin and C.C. Buckle. Using pink noise and white noise measurements, these two papers presented some of the first modern evaluations of cinema acoustics and loudspeaker systems, and defined the challenges of mixing a soundtrack that could be presented consistently in different theaters. They also provided the basis for what would later become known as the “X-Curve,” which would define a standardized equalization curve for cinemas worldwide (or, at least, that was the intent).

Standards? Who Makes These Things Up?

The origins of the “X-Curve” date back to May 1969, when engineers associated with the SMPTE Standards Committee held a meeting at the Moscow convention in an attempt to codify international standards for film sound reproduction. The first draft standard produced by this committee called for a response that was down 14 dB at 8 kHz, measured using either pink noise or white noise inserted at the fader point in the chain (thus removing the “A-chain” characteristics from the results). While this was a good start toward standardizing theater reproduction characteristics, it was still a long way from the “wide range” playback standard that Dolby engineers envisioned. Work continued for another three years, during which there was significant wrangling within the various standards committees. It would take until 1977 for an International Standard to be approved, which subsequently became the basis for SMPTE Standard 202M, defining the characteristics of dubbing rooms, review rooms and indoor theaters.

In 1982, the standard was modified to include different EQ characteristics based on room size and reverberation time, taking into account some of the research that Allen and the Dolby engineering team had originally conducted 11 years earlier at Elstree Studios. This standards stuff takes time…

At Last, Some Improvements

Largely as a result of this early work on theater sound reproduction standards, Dolby was able to showcase material that demonstrated the capabilities of an improved cinema sound system. The first of these was a demo film titled A Quiet Revolution, which Dolby produced in 1972 as a presentation piece aimed primarily at film industry executives. It was one of the first films released with a Dolby A encoded mono optical track and was intended for playback on systems with a modified optical sound reproducer using a narrower slit to extend the HF response. The first Dolby-encoded feature film, Callan, premiered at the Cannes Film Festival in 1974. Although the film received only a limited release, it served as a good demo for EMI and Dolby in their efforts to improve the quality of standard mono optical soundtracks. However, these efforts would soon be overshadowed by the next development in optical sound recording.

Next installment:

Dolby Stereo Optical Sound