by Scott D. Smith CAS

In the previous installment of “WSWR,” we examined the development of Dolby noise reduction and its application to film sound recording. This issue looks at the further work done by engineers at Eastman Kodak, RCA and Dolby Labs in relation to film sound during the 1970s.

The Problem

Ever since the production of Disney’s Fantasia in the late 1930s, studios and film producers had been looking for a low-cost method to distribute release prints accompanied by high-quality multi-channel soundtracks. The first multi-channel optical sound system, developed for Fantasia in 1940, proved so costly (at least $45,000, in 1940 dollars) and cumbersome that only about a dozen road show engagements were mounted with the full stereo sound system (outside of its initial 57-week run at New York’s Broadway Theater). While Fox’s Cinemascope system with four-channel magnetic stripes offered a less costly alternative (about $25,000), it still required striping and sounding prints on special “Foxhole Perf” film base, as well as constant maintenance of the mag heads in the projection system. The same was true of the Todd-AO 70mm six-track system, which saw use only in road show engagements of big-budget studio releases. By the end of the 1950s, research on improved film soundtracks was pretty much at a standstill, and interest in multi-channel film exhibition waned. The exceptions were a few landmark films such as Woodstock and 2001: A Space Odyssey.

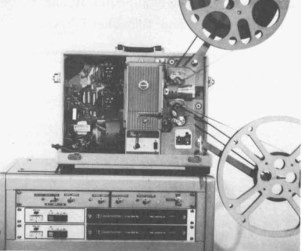

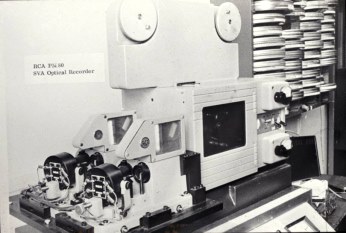

Original RCA stereo variable area optical recorder used at Elstree Studios in 1974. This was the same recorder used at Eastman Kodak for the 16mm stereo experiments, but converted to 35mm. (Photo courtesy of Ron Uhlig/SMPTE)

In the meantime, the general public was enjoying high-quality album fare on quarter-inch tape in the comfort of their homes. With the advent of consumer reel-to-reel recorders, record companies began releasing albums in quarter-inch stereo and even quadraphonic tape formats. The quality of a standard 35mm mono Academy soundtrack reproduction was rather dismal in comparison, especially when played through loudspeaker systems designed in the 1940s. Clearly, there was a real disconnect in the marketplace.

Sample of 16mm stereo optical negative (Photo courtesy of Ioan Allen/Dolby Laboratories)

Nearly a decade would pass before any further work on multi-channel sound was undertaken. Optical soundtracks hadn’t seen any significant advance since the 1940s, apart from incremental improvements in film stocks and minor upgrades to the recording chain. The optical film recording transports made by both RCA and Westrex all dated back to original designs from the 1930s and ’40s.

While the Academy Research Council had conducted work on a push-pull color three-channel optical sound system in 1973, for various reasons it was deemed impractical at the time. Around the same time, Ron Uhlig at Eastman Kodak, with help from Jack Leahy and RCA engineers, was working to improve the quality of 16mm optical sound. Their focus, however, was on producing 16mm stereo variable area optical soundtracks intended to compete with Sony’s three-quarter-inch U-Matic video format in the industrial and educational film markets (still a substantial source of revenue for Kodak). Although the system never gained any traction in the commercial marketplace, their work paved the way for similar developments in 35mm optical sound recording.

One of the key problems with 16mm stereo optical tracks was signal-to-noise ratio, which even in mono was poor: about 50 dB “A” weighted on a good day. Splitting the 60-mil-wide soundtrack area into two 25-mil tracks (with 10-mil track separation) only exacerbated the issue. Applying consumer Dolby B noise reduction improved on this by 6 dB, but the result was still a far cry from the quality of even an average mag stripe print. Although the Dolby A multi-band processor could do better, it would have added significant cost to the projection systems typically employed in the industrial/educational markets. In addition, the inherent constraints of 16mm optical limited the response of release prints to about 7 kHz at best. With few market prospects for the system, RCA and Kodak eventually abandoned their work on 16mm soundtracks.
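To put rough numbers on that track geometry, the sketch below assumes the usual simplification that signal amplitude scales with track width while film-grain noise adds incoherently, so S/N in dB changes by 10·log10 of the width ratio. The 50 dB starting point and the 6 dB Dolby B figure come from the text; the noise model is an assumption, not a measurement of any real print.

```python
import math

def snr_change_db(full_width_mil, track_width_mil):
    """S/N change when a variable-area track is narrowed.

    Assumes signal amplitude is proportional to width and grain noise
    power is proportional to width (incoherent), so S/N ~ width."""
    return 10 * math.log10(track_width_mil / full_width_mil)

MONO_WIDTH = 60      # mils, full 16mm soundtrack area (from the text)
STEREO_TRACK = 25    # mils per stereo channel
SEPARATION = 10      # mils between the two tracks
assert 2 * STEREO_TRACK + SEPARATION == MONO_WIDTH

mono_snr = 50.0      # dB(A), "on a good day"
stereo_snr = mono_snr + snr_change_db(MONO_WIDTH, STEREO_TRACK)
with_dolby_b = stereo_snr + 6.0
print(f"per-track S/N: {stereo_snr:.1f} dB(A); with Dolby B: {with_dolby_b:.1f} dB(A)")
# per-track S/N: 46.2 dB(A); with Dolby B: 52.2 dB(A)
print(f"halving the track: {snr_change_db(2, 1):.2f} dB")
# halving the track: -3.01 dB
```

Under this model, simply halving a track's width costs almost exactly the 3 dB figure usually quoted for stereo optical tracks.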

35mm Stereo Optical Sound… A Long Time Coming

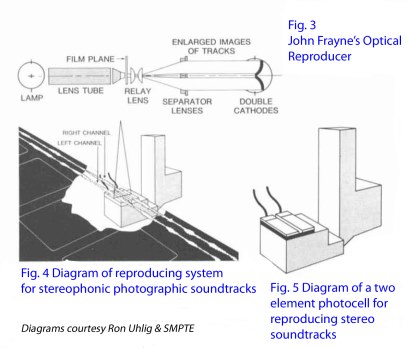

While stereo optical sound was nothing new (Alan Blumlein had developed a system in 1934 and John Frayne did further work in 1953), successfully marrying a two-channel soundtrack to a composite release print for commercial release was a daunting task. Among the issues of the day was the fact that theater projection systems would need to be modified for dual photocell tubes, using a prism optical system of the type that normally would only be encountered on (expensive) studio reproducers. Further, standard mono soundtracks of the era did not have much HF response past 12.5 kHz, and signal-to-noise ratio was still rather abysmal when compared to magnetic soundtracks.

Ray Dolby and Ioan Allen, along with engineers at Dolby Labs, had already tackled these issues as they pertained to mono soundtracks, and applied the same engineering approach to a two-channel variable-area soundtrack on 35mm film. The issue of improved HF response was addressed by reducing the height of the scanning slit on the optical reproducer, and removing the low-pass filter typically employed during the recording of the optical soundtracks. In addition, the advent of the solar cell allowed for an improved optical reproducer system that did not require expensive optical splitters in the projector penthouse.
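Why a smaller slit helps can be estimated with the classic aperture-loss formula for a uniformly illuminated rectangular slit: loss = 20·log10|sin(x)/x|, where x = π × slit width ÷ recorded wavelength. The 18 in/s film speed is standard 35mm projection at 24 frames per second; the two slit widths below are hypothetical values chosen only for comparison, and real slit illumination is never perfectly uniform.

```python
import math

FILM_SPEED_IN_PER_S = 18.0   # 35mm film at 24 frames/s (90 ft/min)

def slit_loss_db(freq_hz, slit_mils, speed_in_per_s=FILM_SPEED_IN_PER_S):
    """Scanning (aperture) loss of a uniformly illuminated rectangular slit.

    Recorded wavelength in mils = 1000 * speed / f, so
    x = pi * slit / wavelength = pi * slit * f / (1000 * speed)."""
    x = math.pi * slit_mils * freq_hz / (speed_in_per_s * 1000.0)
    if x == 0.0:
        return 0.0   # no loss at DC
    return 20.0 * math.log10(abs(math.sin(x) / x))

# Hypothetical slit widths, for comparison only.
for slit in (1.25, 0.5):
    print(f"{slit} mil slit: {slit_loss_db(12500, slit):.1f} dB at 12.5 kHz")
```

At 12.5 kHz the recorded wavelength on 35mm film is only 1.44 mils, so the wider hypothetical slit loses roughly 17 dB while the narrower one loses under 2 dB.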

The first optical recorders (one in the UK and one in the United States) used for striking stereo negatives were based on the RCA dual galvo system as originally conceived by Uhlig and Leahy. Later on, Westrex RA-1231 optical recorders were rebuilt using a modified version of the four-string light valve that dated back to 1938.

At the same time, new electronics were developed for the optical recorders, allowing better control of exposure as well as improved drive for the low-impedance ribbon light valves. Further improvement came from using analog delay lines to compensate for the slow response of the “ground noise reduction” (GNR) system employed in optical recording. The GNR system controlled the area of exposed track, reducing the unmodulated track area to a narrow “bias line.” Delaying the program audio fed to the galvo gave the GNR shutters enough time to open completely during heavily modulated passages, preventing clipping of the first few cycles of the waveform.
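The effect of that delay line can be illustrated with a toy simulation. Everything here is an assumption for illustration: the shutter is modeled as an opening that can widen only by a fixed amount per sample (standing in for its slow mechanical response), it is driven by the undelayed signal, and any part of the waveform that exceeds the opening counts as clipped. The parameter values are arbitrary and measured in samples, not calibrated to any real recorder.

```python
import math

def make_burst(n_silence=50, n_tone=400, period=40, amp=0.9, n_tail=200):
    """Silence, then an abrupt sine-wave burst, then silence again."""
    tone = [amp * math.sin(2 * math.pi * k / period) for k in range(n_tone)]
    return [0.0] * n_silence + tone + [0.0] * n_tail

def gnr_record(signal, delay, bias=0.05, attack=0.01, release=0.00005):
    """Toy GNR model: the shutter opening follows the undelayed signal,
    while the audio reaching the galvo is delayed by `delay` samples.

    Returns (recorded, clipped): the recorded samples and how many of
    them exceeded the shutter opening and were therefore clipped."""
    opening = bias                          # shutters closed down to the bias line
    audio = [0.0] * delay + list(signal)    # delay line on the program path only
    recorded, clipped = [], 0
    for n in range(len(audio)):
        ctrl = signal[n] if n < len(signal) else 0.0
        target = abs(ctrl) + bias           # opening needed, plus a small margin
        if target > opening:
            opening = min(target, opening + attack)   # shutters open at a limited rate
        else:
            opening = max(target, opening - release)  # and close slowly
        sample = audio[n]
        if abs(sample) > opening:           # waveform overruns the exposed track
            clipped += 1
            sample = math.copysign(opening, sample)
        recorded.append(sample)
    return recorded, clipped

burst = make_burst()
_, clipped_no_delay = gnr_record(burst, delay=0)
_, clipped_with_delay = gnr_record(burst, delay=200)
print(clipped_no_delay, clipped_with_delay)
```

With no delay, the opening cycles of the burst overrun the slowly widening shutter and are clipped. In this toy model the shutter needs well over a hundred samples to open fully, so a 200-sample delay absorbs the whole transient and nothing is clipped.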

The 3 dB of S/N ratio lost by halving the soundtrack area for stereo was compensated for by the application of Dolby A noise reduction. Film labs also made additional efforts to control “printer slip” and irregularities in soundtrack print density, factors that contribute to both HF loss and intermodulation distortion. While the resulting soundtrack was still not as good as a magnetic track, it was an improvement over the 40-year-old Academy tracks. Most importantly, laboratories could strike high-volume release prints using conventional printers and processing equipment.

That’s Great; Now How About a Couple More Channels?

At this point, engineers had a working model for a standard two-track, three-channel stereo recording that could be recorded and reproduced on film, using a basic decoding matrix to extract the center channel. Looking back at these developments from the vantage point of 2012, it may not seem like much, but the engineering effort involved in upgrading a nearly 45-year-old format was no mean feat. For real-world application in the cinema, however, it fell short in one crucial area: it needed four channels.
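A minimal sketch of such a three-channel matrix, assuming the center is folded equally into both tracks at -3 dB and recovered from their sum. This is a common textbook arrangement used here for illustration; it is not necessarily the exact coefficient set used on these early prints.

```python
import math

G = 1 / math.sqrt(2)   # -3 dB folding gain (assumed)

def encode_lcr(left, center, right):
    """Fold a center channel equally into both tracks at -3 dB."""
    return left + G * center, right + G * center

def decode_lcr(lt, rt):
    """Basic passive decode: L and R straight through, center from the sum."""
    return lt, G * (lt + rt), rt

l_out, c_out, r_out = decode_lcr(*encode_lcr(0.0, 1.0, 0.0))
print(round(c_out, 3), round(20 * math.log10(l_out), 2))   # 1.0 -3.01
```

A center-only signal comes back at full level from the center output, but it also appears only 3 dB down in the left and right outputs, an early hint of the separation problem that comes up later.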

While consumer stereo systems of the era employed only two (or in the case of quad, four) channels of program material, film reproduction in large cinemas required at least three channels, preferably four. This was to provide for the center channel speaker needed to anchor dialog, as well as for adequate audience coverage in large venues. The Fox Cinemascope magnetic system had four channels and the Todd-AO system had six. If stereo optical soundtracks were to be commercially viable, they would need to accommodate at least four independent channels to match the Left/Center/Right/Surround speaker systems already in place in many theaters.

Fortunately, as a result of the “Quadraphonic” fad of the early 1970s, there was a solution to be had. For those not familiar with the consumer Quadraphonic (usually referred to as “Quad”) systems of the era, a bit of background is required. Thinking that consumers were yearning for something beyond just two channels, record companies began experimenting with four-channel surround sound for home reproduction. Like the VHS and Beta wars soon to follow, competing systems were introduced in the early 1970s, and of course they were all incompatible with each other. Of these, only two matrix-based systems gained any real commercial acceptance. The first to be introduced was the “SQ” system, based on work originally done by Peter Scheiber in the late 1960s. It was first used by CBS for selected record releases in 1971 and was later adopted by at least 11 other record labels.

The competing system was called “QS” for Quadraphonic Sound (later referred to as “RM” for “Regular Matrix”). How’s that for generating confusion in the marketplace?! Developed by engineer Isao Itoh at Sansui Electronics, it was conceptually similar to the Scheiber system but used a different algorithm to extract the encoded four channels from the two-channel source. Alas, the general public was not ready for quadraphonic sound, and except for a diehard group of audio enthusiasts, interest in the format died out after about five years.

However, both systems allowed four channels to be encoded onto a two-channel carrier, which meant they could be adapted to the standard L/C/R/S speaker configuration already in place in theaters that had been converted to magnetic. (Note that the speaker configuration and recording techniques used for consumer quadraphonic systems were completely different from cinema loudspeaker layouts.)
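The principle of carrying four channels on two can be sketched with a simplified, real-coefficient 4:2:4 encoder: center goes into both tracks in phase, surround goes into both tracks in opposite polarity. Practical matrix encoders, QS and SQ included, also use phase-shift networks that this sketch leaves out, so treat it as an illustration of the principle rather than any particular system's coefficients.

```python
import math

G = 1 / math.sqrt(2)   # -3 dB

def encode_424(left, center, right, surround):
    """Simplified 4:2:4 encode: center in phase, surround in opposite polarity."""
    lt = left + G * center + G * surround
    rt = right + G * center - G * surround
    return lt, rt

def mono_sum(lt, rt):
    """What a single-channel reproducer summing both tracks would receive."""
    return lt + rt

def surround_from_difference(lt, rt):
    """Recover the surround component from the track difference."""
    return G * (lt - rt)

lt, rt = encode_424(0.0, 0.0, 0.0, 1.0)    # surround-only signal
print(mono_sum(lt, rt), round(surround_from_difference(lt, rt), 6))   # 0.0 1.0
```

In this simplified form, a surround-only signal cancels completely when the two tracks are summed to mono, yet comes back at full level from their difference; a center-only signal does the opposite.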

In the beginning, Dolby opted to use the QS matrix technology, resulting in the “3:2:3 matrix” system. The complete process was dubbed “Dolby Stereo.” Dolby first employed the QS matrix for the release of Lisztomania and several other films, and A Star Is Born, released in 1976, was the first film to employ Dolby Stereo surround technology. QS was also used to generate the five-channel Quintaphonic magnetic soundtrack for the film Tommy in 1975. However, Dolby abandoned the QS matrix in 1978 in favor of a custom-built matrix that employed a variation on the Scheiber system, referred to as the “MP Matrix.” This was the system employed for the release of Hair in 1978.

Star Wars

While movies like Lisztomania, A Star Is Born and a handful of others helped to generate industry buzz for the Dolby Stereo format, theaters were slow to jump on the bandwagon. Owners were reluctant to invest in yet another sound system after the demise of the Cinemascope four-track magnetic and 70mm six-track systems.

This all changed, however, with the release of George Lucas’s groundbreaking Star Wars in May of 1977, followed by Steven Spielberg’s Close Encounters of the Third Kind in November of the same year. Now theater owners began to sit up and take notice. With more than $353,668,000 in combined domestic rentals, these two films were largely responsible for the sudden interest on the part of theater owners in adopting the Dolby Stereo system. (It should be noted that both films were also released in Dolby Stereo 70mm six-track magnetic for their road show engagements, with 35mm Dolby Stereo optical prints struck for smaller markets and second-run engagements. In addition to ramping up sales of Dolby SVA processors, the success of these films also helped reinvigorate interest in 70mm for road show releases, using the modified Dolby Six Track Magnetic format.)

When Star Wars opened in May of 1977, there were only 46 theaters in the U.S. equipped for Dolby Stereo. By the time Richard Donner’s Superman opened in December of 1978, there were 200 theaters and, within three years, that number increased tenfold to 2,000. Clearly, the folks at Dolby were onto something, and they went on to establish a firm foothold in both the domestic and international theatrical market, a position that they would enjoy exclusively until the release of the competing “Ultra Stereo” system in 1984.

One key factor in the acceptance of the Dolby Stereo SVA (for stereo variable area) system was that prints struck in the format were backward compatible (to varying degrees) with the original mono Academy optical format. This reduced the need for dual print (optical and magnetic) inventories, greatly simplifying distribution. While the format likely would still have won out even without this compatibility, it went a long way toward convincing both studios and theater owners of the long-term viability of the system.

A Few Little Problems…

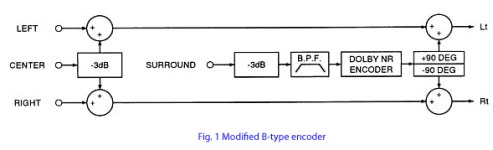

Despite the relative success of the Dolby Stereo variable area system, a few issues remained. Chief among these was poor separation between channels, due to the limitations of the matrix decoder technology. To overcome this, Dolby employed a logic steering system, which helped “steer” the signal to the appropriate channel, curtailing some of the crosstalk between channels. However, this system required diligence during the mix to ensure that nothing ended up where it shouldn’t due to the random phase relationship between channels. For this reason, mixes destined for matrixed Dolby Stereo release are monitored through a combination encoder/decoder on the dub stage, so that mixers hear the decoded result.
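The first step in any logic-steered decoder is deciding which direction, if any, currently dominates. The sketch below is a simplified illustration of that idea, not Dolby’s actual circuit: over a block of samples it compares the power of Lt against Rt (left/right dominance) and the power of their sum against their difference (center/surround dominance), and reports a direction only when one ratio exceeds a threshold. The 10 dB threshold is an arbitrary assumption, and the follow-on step (continuously varying the decoder’s matrix gains) is omitted.

```python
import math

def dominance(lt, rt, eps=1e-12):
    """Return (lr_db, cs_db) for one block of Lt/Rt samples.

    lr_db > 0: left-dominant, < 0: right-dominant.
    cs_db > 0: center-dominant (tracks in phase),
    cs_db < 0: surround-dominant (tracks in opposite polarity)."""
    p_left = sum(a * a for a in lt)
    p_right = sum(b * b for b in rt)
    p_sum = sum((a + b) ** 2 for a, b in zip(lt, rt))
    p_diff = sum((a - b) ** 2 for a, b in zip(lt, rt))
    lr_db = 10 * math.log10((p_left + eps) / (p_right + eps))
    cs_db = 10 * math.log10((p_sum + eps) / (p_diff + eps))
    return lr_db, cs_db

def steer(lr_db, cs_db, threshold_db=10.0):
    """Name the dominant direction, or None if nothing clearly dominates."""
    scores = {"L": lr_db, "R": -lr_db, "C": cs_db, "S": -cs_db}
    direction, score = max(scores.items(), key=lambda item: item[1])
    return direction if score >= threshold_db else None

tone = [math.sin(2 * math.pi * k / 40) for k in range(400)]
print(steer(*dominance(tone, tone)))                 # C
print(steer(*dominance(tone, [-x for x in tone])))   # S
```

Identical tracks read as center and opposite-polarity tracks as surround, which is why uncontrolled phase relationships in a mix can send material to the wrong speaker.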

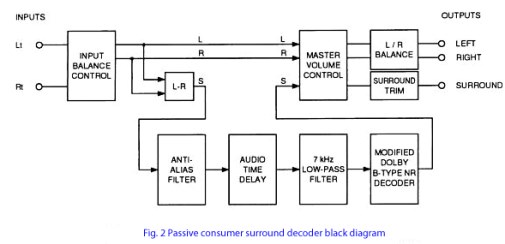

A further issue involved the quality of the surround channel, which is derived by taking the difference between the encoded left and right channels (called Lt and Rt in Dolby parlance) and routing it through a delay network in the playback processor to the auditorium surrounds. (The delay is always applied during reproduction rather than during the final mix, since the appropriate amount depends on the size and layout of each auditorium.)

The limitations of most auditorium surround speakers also made it necessary to restrict the surround bandwidth to 100 Hz–7 kHz to avoid overloading the drivers. Because of the speakers’ proximity to the listener, combined with HF sibilance “splash” (caused by azimuth errors in the projector’s sound reproducer and uneven slit illumination), it was also necessary to apply a further 6 dB of noise reduction to the surround channel in the form of Dolby B.
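The surround path described above can be sketched as a short signal chain: take the scaled Lt minus Rt difference, delay it by an amount set for the particular auditorium, then band-limit it to 100 Hz–7 kHz. The gentle one-pole filters, the 48 kHz sample rate and the 20 ms default delay are assumptions chosen for illustration, and the Dolby B decoding stage is left out entirely.

```python
import math

def one_pole_highpass(x, cutoff_hz, fs):
    """First-order RC-style high-pass filter."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = rc / (rc + 1.0 / fs)
    y, prev_x, prev_y = [], 0.0, 0.0
    for sample in x:
        prev_y = alpha * (prev_y + sample - prev_x)
        prev_x = sample
        y.append(prev_y)
    return y

def one_pole_lowpass(x, cutoff_hz, fs):
    """First-order RC-style low-pass filter."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = (1.0 / fs) / (rc + 1.0 / fs)
    y, prev_y = [], 0.0
    for sample in x:
        prev_y = prev_y + alpha * (sample - prev_y)
        y.append(prev_y)
    return y

def surround_feed(lt, rt, fs=48000, delay_ms=20.0, low_hz=100.0, high_hz=7000.0):
    """Delayed, band-limited surround feed from Lt/Rt (Dolby B stage omitted)."""
    g = 1 / math.sqrt(2)
    diff = [g * (a - b) for a, b in zip(lt, rt)]   # surround lives in the difference
    d = round(fs * delay_ms / 1000.0)              # delay in samples, set per auditorium
    delayed = ([0.0] * d + diff)[:len(diff)]
    return one_pole_lowpass(one_pole_highpass(delayed, low_hz, fs), high_hz, fs)
```

Because a center-only signal is identical in both tracks, it cancels in the difference and never reaches the surrounds in this sketch; anything that differs between the tracks, including noise and the azimuth-induced errors mentioned above, does get through.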

While all this processing and signal manipulation may look inordinately complicated to some, it must be remembered that in 1975 the only alternative for getting four channels onto a film print involved expensive mag striping and sounding of prints, which also meant dual inventory for distribution.

While various improvements were made to the original MP matrix technology over the years, the system remains backward compatible with films made after about 1978.

What About the Folks at Home?

Having firmly established their brand in the cinema industry, Dolby wasted no time in applying the technology they had developed for theaters to the consumer market. The arrival of the improved Hi-Fi versions of both Betamax and VHS in 1982 provided the first practical mass-market opportunity to distribute feature films with surround sound to the public for home viewing. With a catalog of film releases dating back about seven years, Dolby saw an opportunity to exploit the market for movie aficionados craving something beyond standard two-channel reproduction (audio on the early, pre-Hi-Fi versions of both VHS and Betamax was of rather poor quality). Thus was born “Dolby Surround,” the moniker used to denote the consumer version of the Dolby Stereo cinema system. The major difference between the early consumer systems and the more sophisticated theater version was that the consumer version had only three output channels: Left, Right and Mono Surround.

While this approach helped provide a sense of spatial imaging for Dolby Stereo films, the lack of a separate center channel to anchor the dialog was a noticeable deficiency, which Dolby addressed in 1987 with the release of the consumer version of Dolby Surround with Pro Logic. This system was licensed to various consumer audio manufacturers, who built the Pro Logic decoder into packaged audio receivers. Further refinements came in 2000, when Dolby adopted technology developed by Jim Fosgate (released as Dolby Pro Logic II) to encode and extract five channels of audio: Left, Center, Right, and stereo (left and right) Surround.

While the Pro Logic system was the precursor to the discrete 5.1 systems we enjoy today, the 5.1-channel format itself was first employed for the six-track 70mm releases of Superman (1978) and Apocalypse Now (1979). The first use of 5.1 on 35mm film was the release of Batman Returns in Dolby Digital in 1992.

One reason for the rapid adoption of the Dolby Surround system was its backward compatibility with the earlier Academy mono systems that were still prevalent in the ’70s. This meant that films mixed in Dolby Stereo for cinema release could be directly mastered for home video using the matrixed two-channel (Lt/Rt) soundtrack masters. The only work typically required was to decode the Dolby A noise reduction used on the Lt/Rt magnetic print masters. This saved a considerable amount of money for studios wishing to license their back catalog into the burgeoning home-video market. Were it not for this, it is doubtful that Dolby Surround would have gained the market acceptance it did, and the public would likely have had to wait at least a few more years to enjoy at home the quality of sound that was already available to them in the theater.

Next: Digital sound comes to the movies

Many thanks to Ioan Allen of Dolby Labs and Ron Uhlig for their contributions to this article.

All photos and diagrams credited to Ron Uhlig/SMPTE were originally published in the SMPTE Journal, April 1973, Volume 82, pp. 292-295. DOI 10.5594/JO8887. Copyright 1973 SMPTE. Permission to reprint is gratefully acknowledged to the SMPTE and to Eastman Kodak, Ron Uhlig’s employer.

© 2012 Scott D. Smith CAS