by Scott D. Smith, CAS

In the previous issue of “When Sound Was Reel,” we examined the development of optical recording technologies for general 35mm film releases. In this installment, we cover the next generation of technology designed for discrete multi-channel theatrical exhibition.

Digital Comes to the Cinema

Although the development of Dolby Stereo, as conceived for encoding four channels of audio onto a two-channel release print, was a huge step forward in terms of sound quality for standard 35mm film releases, it still could not rival the quality of a good four-track or six-track magnetic release (at least when the film and heads were still in good condition). Besides the advantages of a superior signal-to-noise ratio and wider bandwidth, discrete magnetic recording systems were also free of the compromises inherent to the 4-2-4 matrix, which meant that for Dolby Stereo there would always be some crosstalk issues between channels.

While this issue was minimized by careful channel placement during re-recording, it was still a far cry from the luxury offered by independent mag tracks on either 35mm or 70mm film. This was not lost on the engineers at leading suppliers to the film industry, who realized that further development would be needed if they were going to maintain a lead in the marketplace.

Kodak Gets Into the Sound Business (Again)

Having made early efforts in developing stereo analog optical soundtracks (which later became the basis for Dolby SVA), Kodak once again saw an opportunity in the late 1980s to advance the state of the art in sound for release prints. As 16-bit digital audio became an accepted standard for consumer audio, Kodak, in partnership with Optical Radiation Corporation (ORC), invested a significant amount of money in developing a six-channel system that could record discrete digital soundtracks onto standard 35mm prints.

Determining how much data could be jammed into the area presently occupied by the analog soundtrack was the first design hurdle. Kodak engineers worked on developing a new sound negative film stock that had sufficient resolution to encode a data block only 14 µm (14 micrometers) in size. While the print stock used during this era could support the minuscule size of the data block, a new fine-grain negative stock (2374) was needed to handle the recording of the data. With this aspect of the system solved, they determined that a 16-bit PCM signal could be reliably encoded using data compression. In practice, the system eventually employed a delta-modulation scheme, whereby the original 16-bit audio was compressed into a 12-bit word. Even with this compression scheme, though, the bit stream rate worked out to be 5.8 Mb per second, roughly four times the data rate of a standard audio CD. The resulting system was named the Kodak Cinema Digital Sound system (CDS for short).

The high data rate presented a real challenge when it came to reliably streaming data from a reader on a standard projector. Because of this, early installations incorporated modifications to the projector transports to provide more stable scanning of the optical track across the sound head.

After a period of initial development, Kodak and ORC premiered their system with the release of the film Dick Tracy in June of 1990, in both 35mm and 70mm versions, two years before the release of Batman Returns in Dolby Digital. While the system was generally well received, it had one fatal flaw: no backup track. Since the digital soundtrack occupied the full area previously inhabited by the analog soundtrack, this meant that any failure of the reader would result in no sound being heard at all. (It should be pointed out that the engineers involved in the development were in strong opposition to this approach, but management dismissed their concerns.) It was this aspect of the system (along with the nearly $20,000 theater conversion costs) that would ultimately spell its demise two years later, with only nine films having been released using the system.

Thus came to an end Kodak’s second foray into sound recording systems for film.

Dolby Digital 1.0

In about 1988, roughly a decade after the release of Star Wars, Dolby engineers began development work on a completely new soundtrack format, one that would no longer rely solely on analog recording for release prints. At this juncture, nearly five years had passed since the introduction of CD players into the consumer market, notably Sony’s CDP-101. Just as the quality of consumer audio systems outpaced the typical sound system found in theaters during the 1950s and 1960s, the introduction of digital audio to the marketplace would once again lead the film industry into a new series of engineering challenges.

As part of their engineering mandate (no doubt strengthened upon witnessing the demise of the Kodak/ORC system), Dolby made two decisions pertaining to the Dolby Digital system design:

• The system had to be backwards compatible, and

• The soundtrack had to be carried on the film itself (i.e., not on a separate medium, such as a separate interlocked player or dubber).

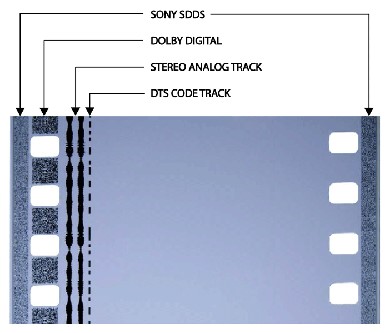

These mandates posed some serious constraints as to how much data could be recorded onto the film. Since the format had to be backwards compatible, the existing optical soundtrack had to remain in place. Since there was no option for moving the picture image, the only significant area left was either between the perforation area and the outside edge of the film (an area about 3.4mm wide), or between the perforations themselves (about 2.8mm wide). Dolby engineers chose the latter as the area that held the most promise, reasoning that the area outside the sprockets was more prone to damage.

Moreover, Kodak (and others) used the area outside the sprockets for latent image key codes, making it unsuitable for soundtrack imaging. While the theory that the space between the perforations was more protected had some merit (as experienced by Kodak with their CDS system), the unfortunate reality was that many film projectors still produced a significant amount of wear in the perforation area, making robust error correction and a backup analog soundtrack a must.

Despite these hurdles, Dolby engineers managed to produce a system that was quite reliable, given the constraints that they had to work with. In its original configuration, Dolby Digital consisted of a bit stream encoded at a constant rate of 320 kb per second, with a bit depth of 16 bits. While the bit rate was only about one-quarter that of a standard audio CD, it did, for the first time, make possible a system that could record six discrete audio channels on a 35mm release print and was also backwards compatible with existing 35mm analog soundtracks.

However, despite Kodak’s exit from the market, Dolby engineers were not the only ones in the game when it came to digital soundtrack development.

DTS Throws Down the Gauntlet

While engineers were toiling away at Dolby, other entrepreneurs were looking at similar possibilities for marrying a digital soundtrack to film. A notable example was Terry Beard, who ran a small company called Nuoptix, which had specialized in producing upgraded recording electronics for analog optical recorders. These systems became the basis for many of the Dolby Stereo variable-area optical recording systems installed as Dolby Stereo achieved a greater market penetration.

Beard chose to take a slightly different approach to the conundrum of how to fit sufficient data onto the minuscule area available on standard 35mm prints. Instead of recording the audio signal directly onto the film, he chose to simply record timecode in the very small section between the picture frame and the inside edge of the analog soundtrack. This timecode was in turn used to slave playback from a specially modified CD player that could carry up to six discrete channels of high-quality audio. Known as “double system” in industry parlance, this approach had been used in the past for specialty releases like Fantasia, all the Cinerama films, as well as the original Vitaphone disk releases from Warner Bros. In general, this approach was not well received in the industry, due to problems associated with separate elements for picture and sound. Besides the possibility of an element becoming lost or separated, there were huge synchronization headaches.

Beard, however, was convinced of the viability of the format, and continued to press on in development of what would become known as DTS. After a chance encounter with Steven Spielberg, Beard had the opportunity to showcase the system to him in August of 1992. After some further work and demos to execs at Universal, Spielberg was convinced of the viability of the system, and by February of 1993, Digital Theater Systems was officially formed, with Spielberg himself signing on as one of the investors.

With Jurassic Park scheduled for release in June of that year, Beard and his team had only four scant months to assemble enough units to supply theaters to support the wide opening planned. Undaunted by this nearly impossible deadline, Beard and his staff managed to deliver 900 processors to theaters by the second week of the film’s run! Fortunately, most of the technology needed to actually go into production had already been vetted, so the primary hurdle was simply building enough units to supply the theaters.

While this “double system” approach was still not generally well received by distributors (disks could be lost or damaged), it did provide for high-quality reproduction of discrete soundtracks with a minimal amount of data compression. In the early 1990s, there were few options available for compressing audio data onto limited carriers. After reviewing the options, Beard chose a system developed by Audio Processing Technology (APT) out of Belfast, Northern Ireland. Data reduction is a tricky business. The APT system was unique in that it used only a predictive mathematical table to encode and reconstruct the data, as opposed to techniques employing “masking” of the signal. Because the system offered an off-the-shelf solution, it was very attractive to DTS, which did not have to develop its own data-reduction system.
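To make the idea of predictive coding more concrete, here is a minimal Python sketch of its simplest form, differential PCM: each sample is predicted from the previously reconstructed value, and only the difference, limited to a 12-bit range, is stored. This is an illustration only and is not the APT algorithm (or Kodak's delta-modulation scheme); the function names and the 12-bit width are assumptions chosen for the example.

```python
def dpcm_encode(samples, bits=12):
    """Encode integer samples as differences clamped to a signed `bits`-bit range.

    Hypothetical helper for illustration; not any vendor's actual codec.
    """
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    predicted = 0  # the encoder tracks what the decoder will reconstruct
    codes = []
    for s in samples:
        diff = max(lo, min(hi, s - predicted))  # clamp the prediction error
        codes.append(diff)
        predicted += diff
    return codes


def dpcm_decode(codes):
    """Rebuild samples by accumulating the stored differences."""
    out = []
    value = 0
    for c in codes:
        value += c
        out.append(value)
    return out


smooth = [0, 100, 300, 250, -1000]
print(dpcm_decode(dpcm_encode(smooth)) == smooth)
```

A slowly varying signal is reconstructed exactly, while a jump larger than the 12-bit range can only be caught up over several samples, which hints at why data reduction is a tricky business.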

A further feature of DTS was that it could seamlessly adjust for any missing frames in the film, automatically compensating for the lost timecode by providing a large buffer between the disk and the system output, which could make up for dropped frames.
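The general principle of chasing timecode can be sketched as follows. In this minimal Python sketch, the audio position is always derived from the frame number read off the print rather than from elapsed playing time, so a splice that removes frames simply moves the audio pointer ahead. The frame-number timecode, the function names, and the use of 24 frames per second with 44.1 kHz audio are assumptions for illustration; this is not a description of the actual DTS processor.

```python
SAMPLE_RATE = 44_100  # samples per second (assumed for this example)
FILM_FPS = 24         # standard 35mm projection rate


def sample_offset(frame_number):
    """Audio sample position that corresponds to a film frame number."""
    return frame_number * SAMPLE_RATE // FILM_FPS


def chase(frames_read):
    """Return (frame, start_sample, frames_skipped) for each timecode value read.

    Hypothetical helper: a gap in the frame numbers means footage is missing,
    and playback jumps to the sample that matches the new frame.
    """
    plan = []
    previous = None
    for frame in frames_read:
        skipped = 0 if previous is None else frame - previous - 1
        plan.append((frame, sample_offset(frame), skipped))
        previous = frame
    return plan


# Frames 102 and 103 are missing, as if lost to a splice.
for entry in chase([100, 101, 104]):
    print(entry)
```

In a real player, a buffer between the disk and the output would give the system time to locate the new position before the audio is needed.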

In its original configuration, the DTS system had been designed in two versions: a full six-channel discrete system, as well as an economy two-channel version, which could utilize the same encoded Lt/Rt signal as analog Dolby Stereo. This was, in fact, the version that was delivered to most theaters during the initial Jurassic Park run. However, there were some problems involved with properly setting up the processors in this configuration, and in the end, it was decided that only six-channel systems would be installed.

The deployment of tracks was the same 5.1 approach as used by Dolby Digital, so the only expense incurred by theaters was the installation of the timecode reader on each projector, along with the DTS disk player.

OK, That’s Three. Let’s Add Another Format!

While most industry observers would likely contend that jamming three audio formats onto a single piece of film was probably sufficient, that is not the way the film business works. Not wanting to be left behind, execs at Sony/Columbia decided that they too needed to develop a multi-channel digital sound format for theater exhibition. However, by this point, space was running out on the print, so the only areas left were the space between the sprockets and the outside film edge, and a very small space between the picture frame and the inside of the analog soundtrack (which was already occupied by the DTS timecode).

Undaunted by these constraints, Sony engineers contracted with Semetex Corp., a manufacturer of high-precision photodiode array devices, to design a system that could resolve minuscule amounts of data from the area between the sprockets and the outside edge of the film. This system would become known as Sony Dynamic Digital Sound, or SDDS. Unlike Dolby Digital and DTS, the system boasted eight independent channels of audio! In practice, however, few films ever took advantage of the full eight-channel capability, due to the costs associated with both mixing and equipping theaters with additional speaker systems.

In its original implementation, the Sony system used a 7.1 speaker system. However, unlike 5.1, the Sony system utilized five full-range screen channels, along with stereo surrounds, a layout similar to the original Todd-AO six-track 70mm format (with five screen channels but only mono surrounds). This was quite different from what eventually evolved into Dolby Digital 7.1.

Similar to both Dolby Digital and DTS, the system also required some data compression. To achieve the needed data rate, Sony utilized the ATRAC data compression scheme, which allowed for a compression ratio of about 5:1. Sony also provided for redundancy of the primary eight channels by including four backup channels, in case damage to the film caused data dropouts on the main channels. In practice, this proved to be a necessary feature of the system, as prints were frequently damaged by careless handling on platter systems.

Although Sony had originally planned to deploy the SDDS in December of 1991 for the release of Hook, the work needed to refine the system delayed its release an additional year and a half. It premiered instead with the release of Last Action Hero. Since Sony at that time owned its own theater chain (later sold to Loews), it could leverage its exhibition position to gain market penetration that would have otherwise been difficult to garner in the face of competition from Dolby and DTS. Further, via their ownership of Columbia/TriStar, they could create demand for the system by releasing all of their films with SDDS.

Alas, despite their advantage in the exhibition market, the only other theater chain that signed onto the SDDS system was the AMC chain, which struck a deal with Sony in 1994 to include the system in the new auditoriums they were constructing during their expansion phase. While the much-touted eight-channel capability could theoretically offer an improved theater sound experience, the reality was that fewer than 100 films ultimately used the full capabilities of the format. Further, theaters were reluctant to invest in the hardware and speaker system upgrades necessary to realize the full potential of the system.

Although the system did gain favor with many of the studios for release of bigger budget films, most independent films during this period were being released primarily in Dolby Digital (and possibly DTS), which meant that the capabilities of the SDDS theaters went underutilized.

With market penetration stalled, and facing strong competition from Dolby and DTS, Sony ultimately made the decision to abandon manufacturing of the system in 2004. However, support for existing systems will continue until 2014, and new titles are still being released utilizing the SDDS format.

Review

With the variety of competing formats, it is interesting to take note of the bit stream rates and channel configurations employed by each of the competing systems:

Kodak CDS System (for both 35mm and 70mm film):

Data Rate: 5.8 Mb/sec

Sample Rate: 44.1 kHz

Bit Depth: 16 Bits

Data Compression: Delta Modulation

Channel Configuration:

Five Channel (5.1 with LFE) (Left/Center/Right/Left Surround/Right Surround)

Dolby Digital (for 35mm film):

Data Rate: 320 kb/sec

Sample Rate: 48 kHz

Bit Depth: 16 Bits

Data Compression: AC-3

Channel Configuration:

Mono (Center Channel)

Two-channel stereo (Left + Right)

Three-channel stereo (Left/Center/Right)

Three-channel with mono surround (Left/Right/Surround)

Four-channel with mono surround (Left/Center/Right/Surround)

Four-channel quadraphonic (Left/Right/Left Surround/Right Surround)

Five-channel surround (Left/Center/Right/Left Surround/Right Surround)

Additionally, each of these formats can utilize an extra-low-frequency channel (designated the “.1 channel”), which is usually assigned to a separate subwoofer.

Dolby also provides for 6.1 and 7.1 formats in the Dolby Digital Surround EX format, which implement mono rear surrounds and stereo rear surrounds respectively.

DTS

Data Rate: 1.536 Mb/sec

Sample Rate: 44.1 kHz

Bit Depth: 16 Bits

Data Compression: APT-X100

Channel Configuration:

Five Channel (5.1 with LFE) (Left/Center/Right/Left Surround/Right Surround)

SDDS

Data Rate: 2.2 Mb/sec

Sample Rate: 44.1 kHz

Bit Depth: 20 Bits

Data Compression: ATRAC2

Channel Configuration:

Five Channel (5.1 with LFE) (Left/Center/Right/Left Surround/Right Surround)

Seven Channel (7.1 with LFE) (Left/Left Center/Center/Right Center/Right/Left Surround/Right Surround)
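As a rough cross-check of the data rates listed above, the short Python sketch below compares each figure with the rate of a standard stereo audio CD (44.1 kHz, 16 bits, two channels). It assumes the listed figures are in bits per second (megabits and kilobits), and the names used are hypothetical, chosen only for this example.

```python
# Standard audio CD: 44,100 samples/sec x 16 bits x 2 channels
CD_RATE_BPS = 44_100 * 16 * 2  # 1,411,200 bits per second

# Data rates as quoted in this article, assumed to be bits per second
FORMAT_RATES_BPS = {
    "Kodak CDS": 5_800_000,
    "Dolby Digital": 320_000,
    "DTS": 1_536_000,
    "SDDS": 2_200_000,
}


def ratio_to_cd(rate_bps):
    """Express a bit rate as a multiple of the audio CD rate."""
    return rate_bps / CD_RATE_BPS


for name, rate in FORMAT_RATES_BPS.items():
    print(f"{name}: {ratio_to_cd(rate):.2f}x the audio CD rate")
```

On these assumptions, CDS works out to about 4.1 times the CD rate and Dolby Digital to a little under one-quarter of it, in line with the comparisons made earlier in the text.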

Virtually all of the systems boasted a bandwidth of 20 Hz to 20 kHz (for the primary channels), along with a noise floor that was effectively silent in even the best theaters. Further, they did away with the compromises inherent in the 4-2-4 matrix used for Dolby Stereo analog. While debate still rages among aficionados as to which of the systems sounds the best, there can be no doubt that all of them provided a major step forward for sound reproduction in a theatrical environment.

© 2012 Scott D. Smith, CAS